Jae Myung Kim

I am a PhD student at the University of Tübingen and a member of the ELLIS and IMPRS-IS programs, advised by Zeynep Akata (TU Munich/Helmholtz Munich) and working closely with Cordelia Schmid (Inria/Google). Previously, I completed my B.S. and M.S. degrees at Seoul National University. I am broadly interested in efficient data-centric approaches. At the moment I'm working on the following questions:


Selected papers


Synthetic Training Data

Few-shot Learning

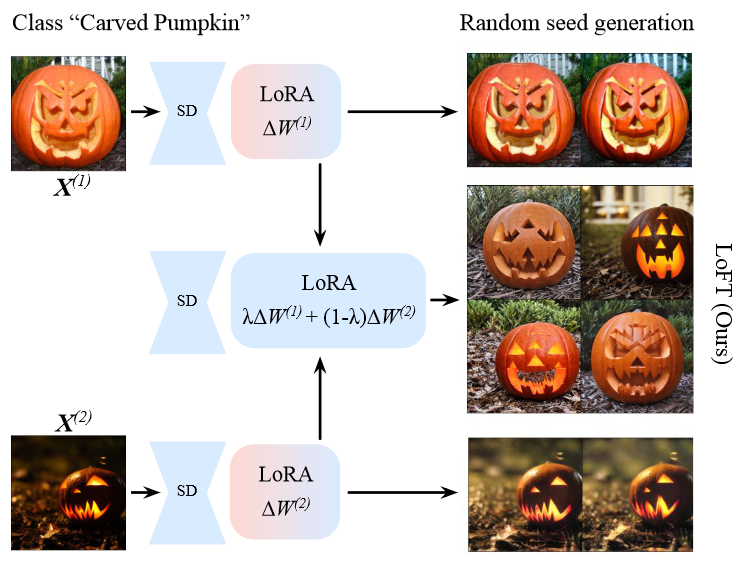

LoFT: LoRA-Fused Training Dataset Generation with Few-shot Guidance

Jae Myung Kim, Stephan Alaniz, Cordelia Schmid, Zeynep Akata

BMVC 2025

arxiv / code

We generate a synthetic dataset guided by few-shot real samples by fusing LoRA weights corresponding to each real sample. The generated datasets have high fidelity and sufficient diversity, both of which contribute to improved performance.

Synthetic Training Data

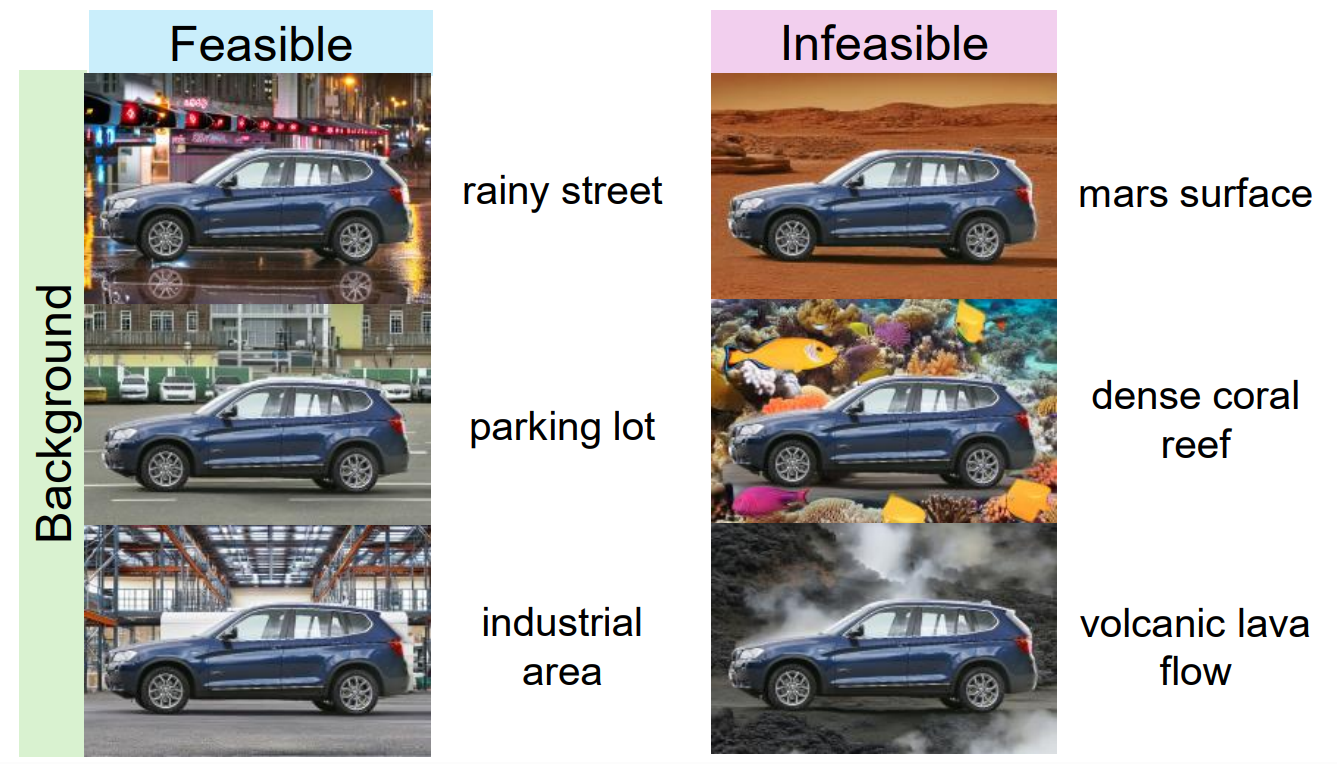

Does Feasibility Matter? Understanding the Impact of Feasibility on Synthetic Training Data

Yiwen Liu, Jessica Bader, Jae Myung Kim

CVPRW on SynData4CV & FGVC12 2025 (Best Paper Award)

arxiv / code

We study whether feasibility matters when training classifiers with synthetic data. We conduct experiments on three different attributes: background, color, and texture.

Synthetic Training Data

Few-shot Learning

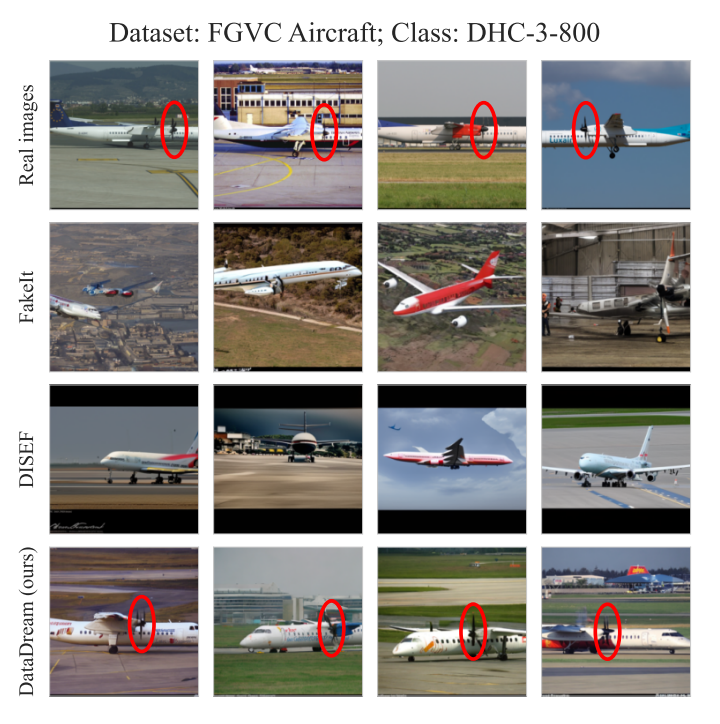

DataDream: Few-shot Guided Dataset Generation

Jae Myung Kim*, Jessica Bader*, Stephan Alaniz, Cordelia Schmid, Zeynep Akata

ECCV 2024

arxiv / code

We generate a synthetic dataset guided by few-shot real samples, which more faithfully represents the real data distribution of the targeted classification task.

Explainability

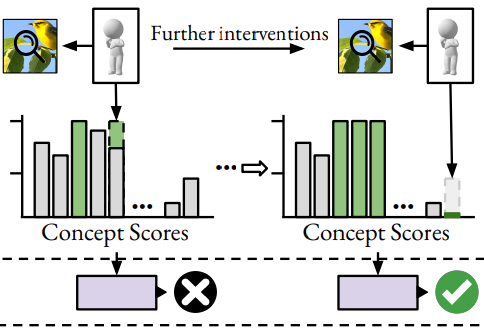

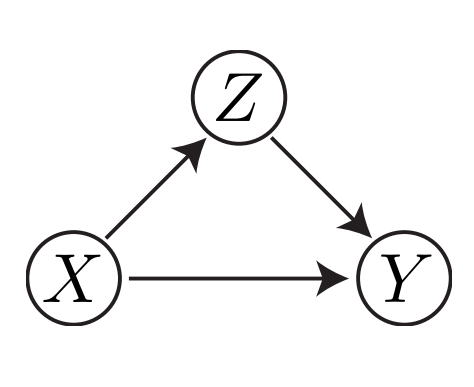

Improving Intervention Efficacy via Concept Realignment in Concept Bottleneck Models

Nishad Singhi, Jae Myung Kim, Karsten Roth, Zeynep Akata

ECCV 2024

arxiv / code

We learn concept relations to realign concept assignments post-intervention in Concept Bottleneck Models (CBMs). This effectively reduces the number of interventions needed to reach a target performance.

Zero-shot Learning

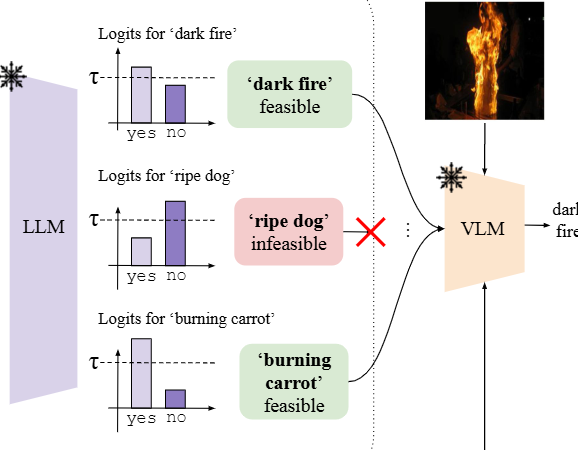

Feasibility with Language Models for Open-World Compositional Zero-Shot Learning

Jae Myung Kim, Stephan Alaniz, Cordelia Schmid, Zeynep Akata

ECCV workshop 2024

arxiv

We leverage LLMs to determine the feasibility of state-object combinations for the open-world compositional zero-shot learning task.

Zero-shot Learning

Waffling around for Performance: Visual Classification with Random Words and Broad Concepts

Karsten Roth*, Jae Myung Kim*, A. Sophia Koepke, Oriol Vinyals, Cordelia Schmid, Zeynep Akata

ICCV 2023

arxiv / code

We achieve comparable zero-shot CLIP performance without access to external models by using random characters and random word descriptors.

Bias

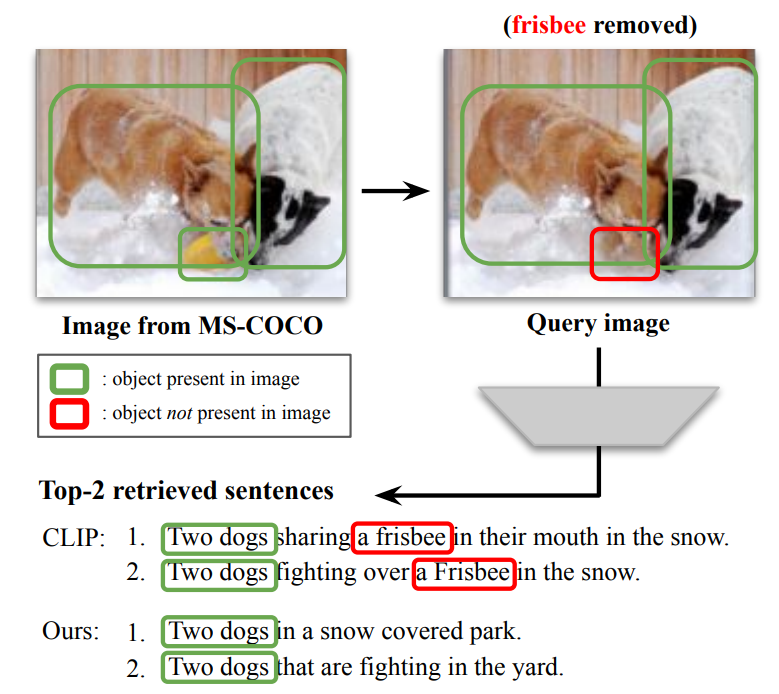

Synthetic Training Data

Exposing and Mitigating Spurious Correlations for Cross-Modal Retrieval

Jae Myung Kim, A. Sophia Koepke, Cordelia Schmid, Zeynep Akata

CVPR Workshop 2023

arxiv

We find that image-text retrieval models commonly learn to memorize spurious correlations in the training data. We introduce a metric that measures a model’s robustness to these spurious correlations, and de-bias the models by finetuning them on a controlled synthetic dataset.

Weak Alignment

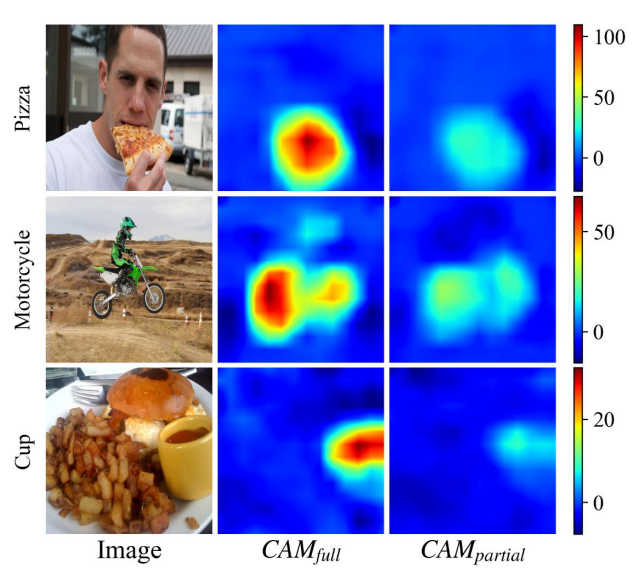

Explainability

Bridging the Gap between Model Explanations in Partially Annotated Multi-label Classification

Youngwook Kim, Jae Myung Kim, Jieun Jeong, Cordelia Schmid, Zeynep Akata, Jungwoo Lee

CVPR 2023

arxiv / code

We observe that the explanations of two models, one trained with full labels and the other with partial labels, highlight similar regions but with different scaling. We then propose to boost the attribution scores of the model trained with partial labels so that its explanation resembles that of the model trained with full labels.

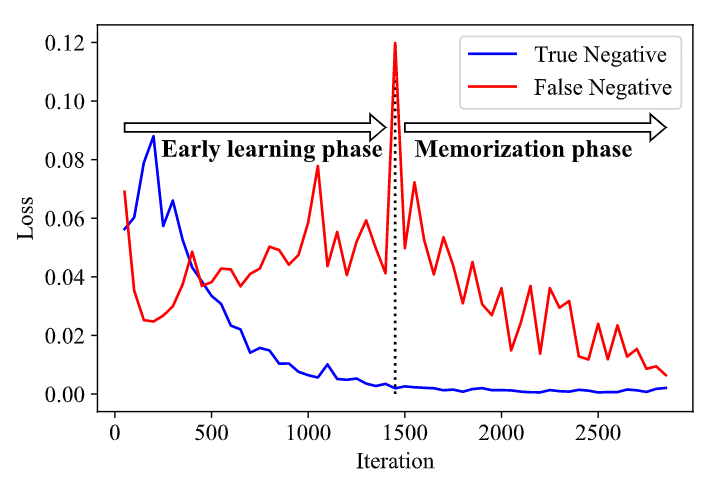

Weak Alignment

Large Loss Matters in Weakly Supervised Multi-Label Classification

Youngwook Kim*, Jae Myung Kim*, Zeynep Akata, Jungwoo Lee

CVPR 2022

arxiv / code

We frame the partially labeled setting as a noisy multi-label classification task, and observe the memorization effect. We then propose to reject or correct high-loss samples, preventing the memorization of noise.

Explainability

Keep CALM and Improve Visual Feature Attribution

Jae Myung Kim*, Junsuk Choe*, Zeynep Akata, Seong Joon Oh

ICCV 2021

arxiv / code

Class Activation Mapping (CAM) is widely used for visual feature attribution, but its reliance on ad-hoc calibration steps outside the training graph limits its interpretability. We address this issue by introducing a latent variable for cue location, an explanation by itself, in the training graph.

Design and source code from Leonid Keselman's website